AWS-EKS-06--Installing the Amazon EFS CSI Driver

Summary

- This post walks through installing the Amazon EFS CSI driver on an EKS cluster.


Choosing between EFS and EBS

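- To share storage between multiple containers in the same Pod, you can use an EBS volume: those containers always run on the same node, and an EBS volume is attached to a single node in a single Availability Zone (ReadWriteOnce).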

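- To share storage between different Pods, which may be scheduled onto nodes in different Availability Zones, use an EFS file system instead: it can be mounted by many nodes across AZs at the same time (ReadWriteMany).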

Installing the Amazon EFS CSI driver

- Create the IAM policy and role

```shell
# Download the IAM policy document from GitHub
```
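The commands for this step can be sketched roughly as follows, assuming `eksctl` is installed and an IAM OIDC provider is already associated with the cluster (both are assumptions, not shown in this post). The policy name `AmazonEKS_EFS_CSI_Driver_Policy` and the service account `efs-csi-controller-sa` follow the naming in the AWS documentation; the wrapper function `create_efs_csi_iam` and its arguments are hypothetical.

```shell
# Hypothetical helper: create the EFS CSI IAM policy and attach it to the
# controller's service account through IAM Roles for Service Accounts (IRSA).
# Assumes eksctl is installed and the cluster already has an OIDC provider.
create_efs_csi_iam() {
  local cluster="$1" region="$2" policy_arn
  # Example policy document published in the aws-efs-csi-driver repository
  curl -fsSL -o iam-policy-example.json \
    https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json || return 1

  # Create the policy and keep its ARN for the next command
  policy_arn=$(aws iam create-policy \
    --policy-name AmazonEKS_EFS_CSI_Driver_Policy \
    --policy-document file://iam-policy-example.json \
    --query Policy.Arn --output text) || return 1

  # Create the IAM role and the annotated service account in kube-system
  eksctl create iamserviceaccount \
    --cluster "$cluster" \
    --namespace kube-system \
    --name efs-csi-controller-sa \
    --attach-policy-arn "$policy_arn" \
    --approve \
    --region "$region"
}
```

For example, `create_efs_csi_iam my-cluster us-east-1` (cluster name and region are placeholders). `eksctl` creates the IAM role and annotates the service account in `kube-system`, so the driver's controller can reuse it instead of creating its own.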

- Install the Amazon EFS CSI driver

```shell
# Add the Helm repository.
```
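A minimal sketch of the Helm install, assuming the `efs-csi-controller-sa` service account already exists. `controller.serviceAccount.create` and `controller.serviceAccount.name` are values of the `aws-efs-csi-driver` chart; the function name `install_efs_csi_driver` is hypothetical. No image override is set, so the chart's default image is used.

```shell
# Hypothetical helper: install (or upgrade) the driver from its Helm chart,
# reusing the existing IRSA service account instead of creating a new one.
install_efs_csi_driver() {
  helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/ || return 1
  helm repo update || return 1
  helm upgrade --install aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \
    --namespace kube-system \
    --set controller.serviceAccount.create=false \
    --set controller.serviceAccount.name=efs-csi-controller-sa
}
```

After it finishes, `kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"` should list the `efs-csi-controller` and `efs-csi-node` Pods.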

Creating an Amazon EFS file system

-

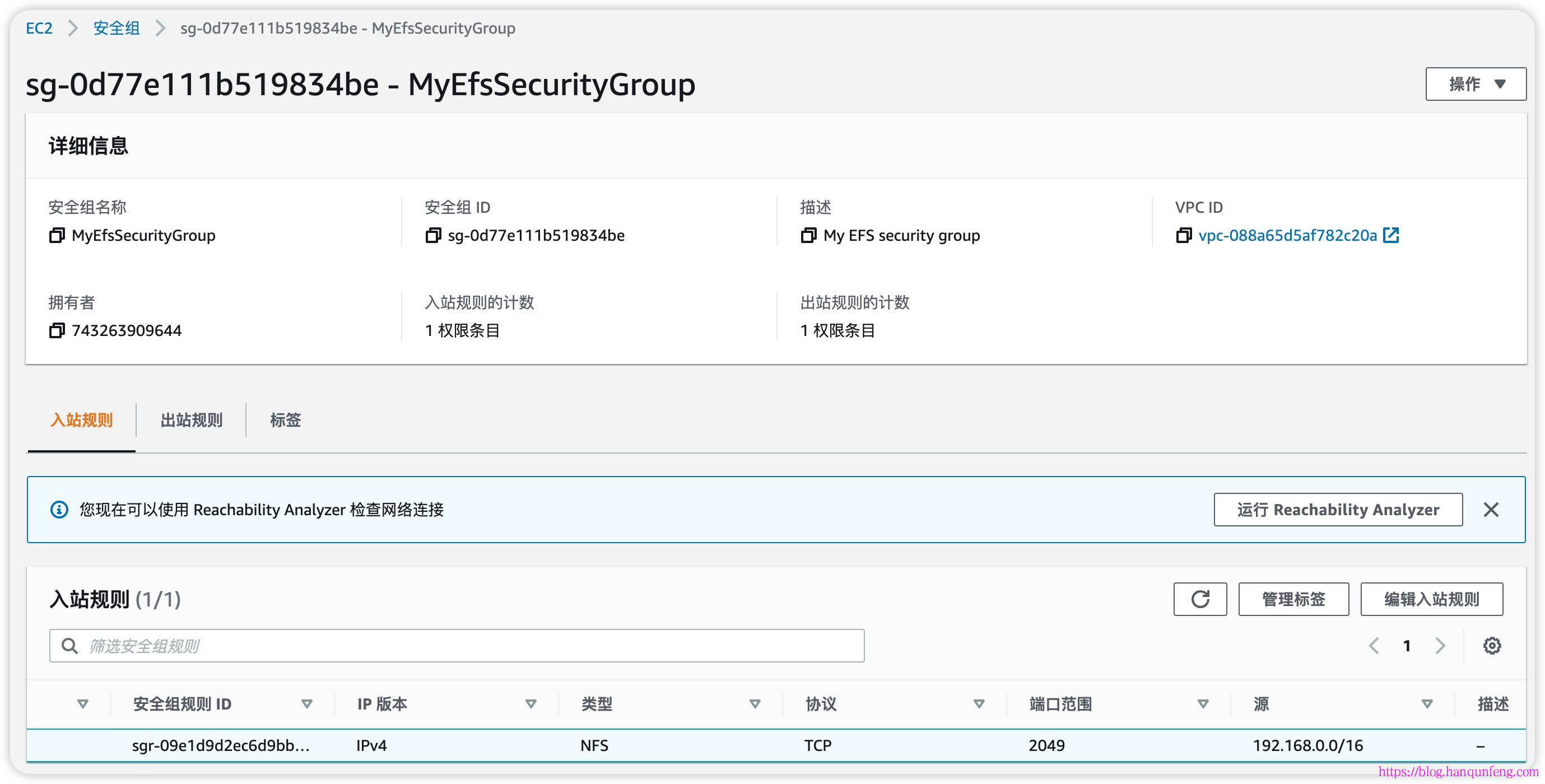

Create a security group

```shell
# Retrieve the ID of the VPC your cluster runs in and store it in a variable for use in later steps.
```
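The security-group step can be sketched like this, assuming it is acceptable to allow NFS (TCP 2049) from the VPC's whole primary CIDR block. The group name `MyEfsSecurityGroup` and the function `create_efs_security_group` are hypothetical; `--query GroupId` keeps the output to the group ID alone.

```shell
# Hypothetical helper: create a security group in the cluster's VPC that
# allows NFS (TCP 2049) from the VPC CIDR, then print the group ID.
create_efs_security_group() {
  local cluster="$1" vpc_id cidr_range sg_id
  # VPC that the EKS cluster runs in
  vpc_id=$(aws eks describe-cluster --name "$cluster" \
    --query "cluster.resourcesVpcConfig.vpcId" --output text) || return 1
  # Primary CIDR block of that VPC
  cidr_range=$(aws ec2 describe-vpcs --vpc-ids "$vpc_id" \
    --query "Vpcs[0].CidrBlock" --output text) || return 1
  sg_id=$(aws ec2 create-security-group \
    --group-name MyEfsSecurityGroup \
    --description "EFS security group for ${cluster}" \
    --vpc-id "$vpc_id" \
    --query GroupId --output text) || return 1
  # EFS mount targets accept NFS traffic on TCP 2049
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" --protocol tcp --port 2049 --cidr "$cidr_range" >/dev/null || return 1
  echo "$sg_id"
}
```

For example, `sg_id=$(create_efs_security_group my-cluster)` keeps the ID for the mount-target step.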

- Create the EFS file system

```shell
# Create the file system
```
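Creating the file system itself might look like this. Encryption at rest (`--encrypted`) and the `Name` tag are choices made for this sketch, not requirements; `create_efs_file_system` is a hypothetical wrapper.

```shell
# Hypothetical helper: create an encrypted General Purpose EFS file system
# tagged with a Name, and print its FileSystemId.
create_efs_file_system() {
  local name="$1" region="$2"
  aws efs create-file-system \
    --region "$region" \
    --performance-mode generalPurpose \
    --encrypted \
    --tags "Key=Name,Value=${name}" \
    --query FileSystemId --output text
}
```

For example, `fs_id=$(create_efs_file_system eks-efs us-east-1)`. Mount targets can only be added once the file system's `LifeCycleState` is `available`, which `aws efs describe-file-systems --file-system-id "$fs_id" --query 'FileSystems[0].LifeCycleState'` reports.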

EFS mount targets and security group (network configuration)

- Find the IDs of the subnets your cluster nodes are in, and the Availability Zone of each subnet

```shell
# Create the mount targets
```
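One way to gather this information is to compare the nodes' internal IPs and zone labels with the subnets of the cluster VPC, as sketched below. The function name `show_node_subnets` is hypothetical, and its argument is assumed to be the cluster's VPC ID.

```shell
# Hypothetical helper: show nodes (internal IP and zone) next to the VPC's
# subnets (ID, AZ, CIDR) so each node can be matched to its subnet.
show_node_subnets() {
  local vpc_id="$1"
  # -o wide adds the INTERNAL-IP column; -L adds the zone label as a column
  kubectl get nodes -o wide -L topology.kubernetes.io/zone || return 1
  # All subnets in the cluster VPC
  aws ec2 describe-subnets \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'Subnets[*].{SubnetId: SubnetId, AvailabilityZone: AvailabilityZone, CidrBlock: CidrBlock}' \
    --output table
}
```

Subnets in a VPC do not overlap, so each node's INTERNAL-IP falls inside exactly one subnet's CIDR; those subnets are the ones that need mount targets.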

- Add a mount target for each subnet that contains nodes

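```shell
# Run the command once for each subnet with nodes in each AZ, replacing the subnet ID each time; here the subnets of both nodes need a mount target
```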
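Rather than running the command by hand once per subnet, a small loop can do it, assuming one subnet per AZ is passed in. `create_mount_targets` and the subnet IDs in the usage line are hypothetical.

```shell
# Hypothetical helper: create one mount target per subnet ID given, all using
# the same NFS security group, and print each new MountTargetId.
create_mount_targets() {
  local fs_id="$1" sg_id="$2" subnet
  shift 2
  for subnet in "$@"; do
    aws efs create-mount-target \
      --file-system-id "$fs_id" \
      --subnet-id "$subnet" \
      --security-groups "$sg_id" \
      --query MountTargetId --output text || return 1
  done
}
```

For example, `create_mount_targets "$fs_id" "$sg_id" subnet-aaaa subnet-bbbb`. EFS allows only one mount target per Availability Zone for a file system, so passing two subnets from the same AZ makes the second call fail.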

Deleting the EFS file system

- Before you can delete a file system with the AWS CLI, you must first delete all mount targets and access points created for it.
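Access points can be removed with a loop like the one below (with `provisioningMode: efs-ap`, the driver creates one access point per dynamically provisioned PV). `delete_access_points` is a hypothetical helper.

```shell
# Hypothetical helper: delete every access point on the given file system.
delete_access_points() {
  local fs_id="$1" ap
  # --output text returns the IDs tab-separated; word splitting iterates them
  for ap in $(aws efs describe-access-points --file-system-id "$fs_id" \
      --query 'AccessPoints[].AccessPointId' --output text); do
    aws efs delete-access-point --access-point-id "$ap" || return 1
  done
}
```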

- Delete the existing mount targets

```shell
# Replace --mount-target-id fsmt-0368ff60b5e4c39ed; run the command once for each mount target to delete
```
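Instead of copying each mount-target ID, the IDs can be listed and deleted in one pass. A sketch with the hypothetical helper `delete_mount_targets`:

```shell
# Hypothetical helper: delete all mount targets of the given file system.
delete_mount_targets() {
  local fs_id="$1" mt
  for mt in $(aws efs describe-mount-targets --file-system-id "$fs_id" \
      --query 'MountTargets[].MountTargetId' --output text); do
    aws efs delete-mount-target --mount-target-id "$mt" || return 1
  done
}
```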

- Delete the EFS file system

```shell
# This can also be done from the console
```
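Mount-target deletion is asynchronous, so `delete-file-system` can fail while targets are still being removed. The sketch below polls the file system's `NumberOfMountTargets` until it reaches 0 and then deletes it; the helper name, the 30 retries, and the 10-second interval are arbitrary choices for illustration.

```shell
# Hypothetical helper: wait until the file system has no mount targets left,
# then delete it. Gives up after 30 checks, 10 seconds apart.
delete_efs_file_system() {
  local fs_id="$1" remaining tries=0
  while :; do
    remaining=$(aws efs describe-file-systems --file-system-id "$fs_id" \
      --query 'FileSystems[0].NumberOfMountTargets' --output text) || return 1
    [ "$remaining" = "0" ] && break
    tries=$((tries + 1))
    if [ "$tries" -ge 30 ]; then
      echo "mount targets still present on $fs_id" >&2
      return 1
    fi
    sleep 10
  done
  aws efs delete-file-system --file-system-id "$fs_id"
}
```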

Testing

Dynamic-demo

- Retrieve your Amazon EFS file system ID. You can find it in the Amazon EFS console or with the following AWS CLI command.

```shell
# Retrieve your Amazon EFS file system ID
```
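A variant of the command that also shows each file system's name and state makes it easier to pick the right ID. `list_efs_file_systems` is a hypothetical wrapper.

```shell
# Hypothetical helper: one line per file system with ID, Name tag, and state.
list_efs_file_systems() {
  aws efs describe-file-systems \
    --query 'FileSystems[*].[FileSystemId,Name,LifeCycleState]' \
    --output text
}
```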

- Create the StorageClass

```yaml
# storageclass.yaml, fileSystemId: fs-09447193939058538
```
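A StorageClass for dynamic provisioning through EFS access points might look like the manifest below, wrapped in a shell function so the file-system ID can be substituted. `provisioningMode`, `fileSystemId`, and `directoryPerms` are parameters of the EFS CSI driver; the helper `render_storageclass` and the `"700"` permissions are choices of this sketch.

```shell
# Hypothetical helper: print an efs-ap StorageClass for the given file system.
render_storageclass() {
  local fs_id="$1"
  cat <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: ${fs_id}
  directoryPerms: "700"
EOF
}
```

For example, `render_storageclass fs-09447193939058538 > storageclass.yaml && kubectl apply -f storageclass.yaml`.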

- Create the PVC, specifying `storageClassName: efs-sc`

```yaml
# pvc.yaml
```
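A matching claim, sketched under the assumption that the StorageClass is named `efs-sc`; the claim name `efs-claim` and the helper `render_pvc` are hypothetical. EFS is elastic, so the `5Gi` request is required by Kubernetes but not enforced by the driver.

```shell
# Hypothetical helper: print a ReadWriteMany claim against the efs-sc class.
render_pvc() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi
EOF
}
```

For example, `render_pvc > pvc.yaml && kubectl apply -f pvc.yaml`.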

- Create the Pod

```yaml
# pod.yaml
```
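A test Pod that appends a timestamp to `/data/out` every 5 seconds, assuming the claim is named `efs-claim`. The Pod name `efs-app`, the `busybox` image, and the helper `render_pod` are assumptions of this sketch. The quoted `'EOF'` keeps `$(date -u)` from being expanded by the local shell, so it is evaluated inside the container instead.

```shell
# Hypothetical helper: print a Pod that writes to the EFS-backed volume.
render_pod() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: efs-app
spec:
  containers:
    - name: app
      image: busybox
      command: ["/bin/sh"]
      args: ["-c", "while true; do echo $(date -u) >> /data/out; sleep 5; done"]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: efs-claim
EOF
}
```

For example, `render_pod > pod.yaml && kubectl apply -f pod.yaml`.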

- Check the results

```shell
# Inspect the PV
```
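The checks for this step might be grouped as follows, assuming the claim is named `efs-claim` and the Pod `efs-app` writes to `/data/out`; `check_efs_demo` is hypothetical.

```shell
# Hypothetical helper: show the provisioned PV, the claim, the Pod, and the
# most recent lines the Pod wrote to the EFS volume.
check_efs_demo() {
  kubectl get pv
  kubectl get pvc efs-claim
  kubectl get pod efs-app
  kubectl exec efs-app -- tail -n 3 /data/out
}
```

With dynamic provisioning, `kubectl get pv` should show a PV named `pvc-…` bound to `default/efs-claim` with storage class `efs-sc`.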

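- If you delete only the Pod and recreate it, the data written earlier is still there. If you delete and recreate the PVC, it is not: with the default `Delete` reclaim policy, deleting the PVC also deletes its PV and the EFS access point behind it, and the new PVC is provisioned with a new access point, so the original data is no longer visible.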
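The Pod-restart half of that behavior can be checked with a sketch like this one, which assumes the Pod `efs-app` writes to `/data/out` and is defined in `pod.yaml`; `verify_data_survives_pod_restart` is hypothetical. It succeeds only if the first line of the file is unchanged after the Pod is recreated.

```shell
# Hypothetical check: recreate only the Pod and confirm that data written
# before the restart is still on the EFS volume.
verify_data_survives_pod_restart() {
  local before
  before=$(kubectl exec efs-app -- head -n 1 /data/out) || return 1
  kubectl delete pod efs-app --wait=true || return 1
  kubectl apply -f pod.yaml || return 1
  kubectl wait --for=condition=Ready pod/efs-app --timeout=120s || return 1
  # The oldest line should be identical if the volume kept its data
  [ "$(kubectl exec efs-app -- head -n 1 /data/out)" = "$before" ]
}
```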

- Delete the test resources

```shell
$ k delete -f pod.yaml
```
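Cleanup of the whole demo can delete the objects in reverse order of creation. The file names `pod.yaml`, `pvc.yaml`, and `storageclass.yaml` match the ones named earlier in this post; `cleanup_efs_demo` is hypothetical, and `--ignore-not-found` makes it safe to rerun.

```shell
# Hypothetical helper: remove the dynamic-provisioning demo (Pod, then PVC,
# then StorageClass).
cleanup_efs_demo() {
  kubectl delete -f pod.yaml --ignore-not-found || return 1
  kubectl delete -f pvc.yaml --ignore-not-found || return 1
  kubectl delete -f storageclass.yaml --ignore-not-found
}
```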

Static-demo

```shell
# Example project
```
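For static provisioning, the PV points directly at an existing file system through `volumeHandle`, and the PVC binds to that PV by name. A sketch, assuming an empty `storageClassName` on both objects so that no StorageClass is involved; the object names and the helper `render_static_pv_and_pvc` are hypothetical.

```shell
# Hypothetical helper: print a statically provisioned PV for an existing EFS
# file system plus a PVC that binds to it explicitly via volumeName.
render_static_pv_and_pvc() {
  local fs_id="$1"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-static-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${fs_id}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-static-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: efs-static-pv
  resources:
    requests:
      storage: 5Gi
EOF
}
```

For example, `render_static_pv_and_pvc fs-09447193939058538 | kubectl apply -f -`. With `persistentVolumeReclaimPolicy: Retain`, deleting the claim leaves the PV and the data on the file system in place.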